OpenAI Gym is a toolkit for developing and comparing reinforcement learning algorithms. It offers a variety of environments for testing agents and analyzing how well they perform. Because it is open source, anyone is free to use it and contribute to its ongoing development.

In this blog, we will discuss what OpenAI Gym is, how to get started with it, its popular environments, and its applications.

About OpenAI Gym

OpenAI Gym was first released to the general public in April 2016, and since then it has rapidly grown in popularity to become one of the most widely used tools for developing and testing reinforcement learning algorithms. It has also stimulated the development of analogous toolkits for other kinds of machine learning.

OpenAI Gym is not the only toolkit available for designing, testing, and comparing reinforcement learning algorithms. PyBullet, MuJoCo, and Roboschool are other well-known examples. Although every toolkit has its own advantages and disadvantages, OpenAI Gym has quickly become one of the most popular and well-respected environments for deep reinforcement learning.

Key features of OpenAI Gym:

- Offers a consistent and simple user interface, making it possible to engage with a wide range of environments.

- Offers a wide range of environments, from simple toy problems to complex video games.

- Makes it possible to compare different algorithms in a standardized way.

- Includes tools for monitoring training progress and visualizing reinforcement learning agent performance.

How OpenAI Gym Works

OpenAI Gym is an open-source library for developing and testing reinforcement learning algorithms. In simple terms, Gym provides a standardized set of environments, and you supply the agent. The agent acts on an environment and, in return, receives observations and rewards as a consequence of its actions.

Here are the four values that the environment returns to the agent for every “step” taken.

- Observation (object): An environment-specific value describing what the agent can observe after the step. It can be simple or complex, depending on the environment. For instance, the board state in a board game, the car’s speed and position in the Mountain-Car environment, or raw pixel data in vision-based tasks.

- Reward (float): The amount of reward or score achieved by the last action. The scale varies from environment to environment, but the goal is always to increase the total reward.

- Done (boolean): Indicates whether the episode has ended and it is time to reset the environment. For instance, you lost your last life in the game.

- Info (dict): Diagnostic information useful for debugging. It is typically used for troubleshooting and understanding what went wrong if the agent is not performing as expected. “Info” can be helpful for tracking the agent’s behavior and decisions during training. However, official evaluations of your agent are not allowed to use this for learning.
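As a minimal sketch of how these four values are typically consumed, the snippet below models one step's result as a named tuple and sums rewards until `done` is set. The `StepResult` type, the `episode_return` helper, and the sample numbers are illustrative assumptions and not part of Gym; in Gym the values come back as a plain tuple from `env.step(action)`.

```python
from typing import Any, Dict, Iterable, NamedTuple


class StepResult(NamedTuple):
    """Hypothetical container mirroring the four values described above."""
    observation: Any
    reward: float
    done: bool
    info: Dict[str, Any]


def episode_return(steps: Iterable[StepResult]) -> float:
    """Sum rewards up to and including the first step where done is True."""
    total = 0.0
    for step in steps:
        total += step.reward
        if step.done:
            break  # the episode is over; the environment would be reset here
    return total


# Made-up transitions shaped like Mountain-Car observations (position, velocity).
trajectory = [
    StepResult((-0.50, 0.00), -1.0, False, {}),
    StepResult((-0.49, 0.01), -1.0, False, {}),
    StepResult((-0.47, 0.02), -1.0, True, {"reason": "step limit"}),
    StepResult((-0.50, 0.00), -1.0, False, {}),  # belongs to the next episode
]

print(episode_return(trajectory))  # prints -3.0
```

The last transition is ignored because the third one already set `done`, which is the point at which an agent would call `reset()` before continuing.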

Getting Started With OpenAI Gym

Installing OpenAI Gym on your computer is the first step to get started with it. OpenAI Gym can be installed on any platform that supports Python. The following are the steps to install OpenAI Gym:

- Step-1: Install Python 3.x:

Python 3.x must be installed on your computer before using OpenAI Gym. Download Python from the official website and follow the installation instructions.

- Step-2: Install the required dependencies:

OpenAI Gym relies on several Python packages, such as NumPy, and, depending on the version and the environments you use, on extras such as pyglet (rendering) and Box2D (physics). You can install these dependencies using pip, the package installer for Python.

- Step-3: Install OpenAI Gym:

Once Python and the required dependencies are installed, you can install OpenAI Gym using pip. Run the following command in your terminal:

    pip install gym

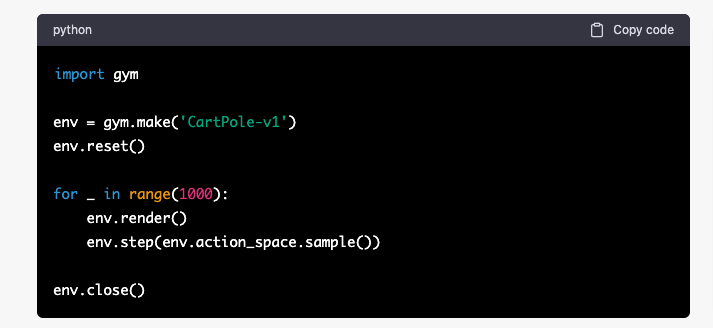
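To confirm that the installation worked, one option is to ask Python whether the package can be found. This is a small sketch using only the standard library; the helper name `installed_version` is an assumption made for illustration.

```python
from importlib import metadata, util
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it cannot be imported."""
    if util.find_spec(package) is None:
        return None
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        # Importable but without distribution metadata (e.g. a standard-library module).
        return "unknown"


version = installed_version("gym")
print(f"gym version: {version}" if version else "gym is not installed")
```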

After installation, you can start using OpenAI Gym by importing it into your Python environment. Here’s an example of how to create an instance of the CartPole-v1 environment and take random actions:

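    import gym

    # Assumes the classic Gym API described above, where step() returns four values.
    env = gym.make('CartPole-v1')
    observation = env.reset()

    for _ in range(1000):
        env.render()
        action = env.action_space.sample()  # pick a random action
        observation, reward, done, info = env.step(action)
        if done:
            # The episode ended, so start a new one before stepping again.
            observation = env.reset()

    env.close()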

In this example, we create a CartPole-v1 environment instance and reset it. Then we take random actions in the environment and render the results on the screen. Finally, we close the environment when we are finished.

Ultimately, OpenAI Gym provides a simple and approachable toolkit for anyone interested in starting out in reinforcement learning. You can quickly install OpenAI Gym on your computer by following these instructions, and you can then start developing and testing deep RL algorithms.

Popular Environments in OpenAI Gym

For the development and testing of reinforcement learning algorithms, OpenAI Gym provides a wide range of environments. The environments range from easy-to-solve games to those that demand substantial processing power.

The following are some of the popular environments in OpenAI Gym:

1. Classic Control: These environments include well-known control problems like CartPole, MountainCar, and Acrobot. These simple environments are ideal for testing fundamental reinforcement learning methods.

2. Atari Games: Pong, Breakout, and Space Invaders are a few of the Atari games available in OpenAI Gym. Because these environments are more complex, they call for more sophisticated reinforcement learning algorithms.

3. Robotics: OpenAI Gym includes several environments for robotics tasks, such as Fetch and Hand. These environments are particularly difficult as they require a trifecta of vision, control, and planning.

4. Board Games: Board games such as Go and Chess can be played through the Gym interface, although in current versions they typically come from third-party packages rather than the core library. Since these environments demand long-term planning and strategic thinking, they pose major difficulties for reinforcement learning systems.

5. MuJoCo: OpenAI Gym includes several environments that use the MuJoCo physics engine, such as Humanoid and Hopper. These environments are particularly challenging as they require precise control and physics simulation.

Future of OpenAI Gym

The future of OpenAI Gym seems promising. Its open-source nature is one of its main benefits because it enables researchers and developers to contribute to its development and enhance its features. Likely future developments include new features and enhancements, such as:

New environments

Although there are many environments in OpenAI Gym for testing reinforcement learning algorithms, there is always a need for more. We can expect new and more challenging environments to be added to OpenAI Gym as the field of reinforcement learning develops.

Better integration with other libraries

TensorFlow, PyTorch, and Keras are some of the well-known libraries that OpenAI Gym is compatible with. We can anticipate even better integration with these libraries in the future, making it simpler for researchers and developers to use OpenAI Gym in their work.

Improved performance

There is always room for improvement, even though OpenAI Gym is already a high-performance toolkit. We can expect better performance and optimization in the future, making it even more effective for developing and testing reinforcement learning algorithms.

Advanced tools

Although OpenAI Gym offers a comprehensive collection of tools for creating and testing reinforcement learning algorithms, there is always a need for more advanced tools. Future updates to OpenAI Gym are likely to include more advanced tools, like those for visualizing and examining reinforcement learning agent behavior.

Overall, OpenAI Gym has a promising future, and we can foresee many additions and upgrades to this robust toolkit in the years to come. OpenAI Gym will continue to be an important tool for researchers and developers alike as the field of reinforcement learning expands.

FAQs

OpenAI Gym was created exclusively for developing and comparing reinforcement learning algorithms. While other machine learning libraries might provide some reinforcement learning support, OpenAI Gym stands out for concentrating on this particular field of machine learning.

To build your own custom Gym environment, you write a Python class that implements the OpenAI Gym environment interface. This class should define the observation space, action space, and reward function for your environment, as well as any additional methods that your environment requires. Once the class is defined, you can register it with OpenAI Gym using the register() function. After registration, you can use your environment in OpenAI Gym just like any other environment. This way, you can create custom environments that are tailored to your specific needs and objectives.

OpenAI Gym provides a platform to develop and test different reinforcement learning agents. Some of the most popular environments are CartPole, MountainCar, and the Atari games. They are popular because, despite being relatively easy to set up, they still provide interesting challenges for reinforcement learning algorithms.
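The outline above can be sketched without any dependencies. The hypothetical `LineWalkEnv` below follows the four-value `step` interface described earlier; it is only a stand-in. A real Gym environment would subclass `gym.Env`, declare its `action_space` and `observation_space` with `gym.spaces`, and be registered through `register()`, none of which is shown here.

```python
import random
from typing import Any, Dict, Tuple


class LineWalkEnv:
    """Hypothetical toy environment: walk from cell 0 to the last cell of a line.

    Stand-in only: it mirrors the reset()/step() shape but does not use Gym.
    """

    ACTIONS = (0, 1)  # 0 = move left, 1 = move right

    def __init__(self, length: int = 5, max_steps: int = 50) -> None:
        self.length = length
        self.max_steps = max_steps
        self.position = 0
        self.steps_taken = 0

    def reset(self) -> int:
        """Start a new episode and return the initial observation."""
        self.position = 0
        self.steps_taken = 0
        return self.position

    def step(self, action: int) -> Tuple[int, float, bool, Dict[str, Any]]:
        """Apply an action and return (observation, reward, done, info)."""
        if action not in self.ACTIONS:
            raise ValueError(f"invalid action: {action}")
        move = 1 if action == 1 else -1
        # Clamp the position so the agent cannot leave the line.
        self.position = min(max(self.position + move, 0), self.length - 1)
        self.steps_taken += 1

        reached_goal = self.position == self.length - 1
        out_of_time = self.steps_taken >= self.max_steps
        reward = 1.0 if reached_goal else -0.1  # reward function: small cost per step
        info = {"steps_taken": self.steps_taken}
        return self.position, reward, reached_goal or out_of_time, info


# Random agent, in the spirit of the CartPole example.
env = LineWalkEnv()
rng = random.Random(0)
observation, done, total_reward = env.reset(), False, 0.0
while not done:
    observation, reward, done, info = env.step(rng.choice(LineWalkEnv.ACTIONS))
    total_reward += reward
print(f"finished after {info['steps_taken']} steps, return {total_reward:.1f}")
```

Keeping the observation, action set, and reward rule inside one class is what lets the same agent loop run against any environment that follows this shape.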

To Conclude

OpenAI Gym is a powerful toolkit for developing and testing reinforcement learning algorithms. Getting started takes little more than installing it and exploring its features. You can then experiment with reinforcement learning algorithms and check their performance in different environments.

OpenAI Gym is set to grow and improve in the future with new environments, better integration with other libraries, and more advanced tools. OpenAI Gym has the potential to drive advancements in reinforcement learning. Whether you are a researcher or a newcomer in this field, OpenAI Gym is worth a try.