OpenAI has officially announced GPT-4, its latest release. The model is already making headlines and is expected to take the world by storm.

GPT-4, the successor to the model behind the highly successful ChatGPT, is being heralded as a massive breakthrough in AI technology. According to OpenAI, GPT-4 is its “most advanced system, producing safer and more useful responses” than previous GPT models. OpenAI has confirmed that it accepts images as well as text as input, and it is rumored that video input may follow.

But what exactly is GPT-4, and what’s new in it? In this blog, we cover everything you need to know about this ground-breaking AI technology.


What is GPT-4?

GPT-4 is the most recent and sophisticated language model from OpenAI. This generative pre-trained transformer is the successor to the extremely popular GPT-3 model, which was introduced in 2020 and rapidly became well-known for its capacity to produce high-quality text in a range of applications.

Like its predecessor, GPT-4 is built on a deep learning model that learns from a huge amount of training data how to generate human-like text. OpenAI has not disclosed GPT-4’s parameter count. Rumors put it in the trillions, compared with GPT-3’s 175 billion parameters, and whatever its actual size, it is substantially more powerful than GPT-3.

According to OpenAI, GPT-4 can handle much more nuanced instructions than GPT-3.5. As a result, it produces more factual responses and more coherent, nuanced text across a range of situations, which makes it even more realistic and intelligent than GPT-3.

Moreover, GPT-4 is a large multimodal model: it accepts image inputs as well as text and produces text outputs. Rumors that it will also accept video inputs have not been confirmed by OpenAI.

Availability, Integration, & Pricing

Availability: Within ChatGPT, GPT-4 is currently available only to ChatGPT Plus subscribers; the free version of ChatGPT will continue to use GPT-3.5.

Integration: GPT-4 is also available through an API that developers can use to build their own applications and services. Several businesses, such as Stripe, Duolingo, Khan Academy, and Be My Eyes, have already integrated GPT-4 into their products.
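For developers who have been granted API access (at launch, through a waitlist), a GPT-4 request is an HTTPS POST to OpenAI’s Chat Completions endpoint. The minimal sketch below builds such a request using only the Python standard library. It assumes the endpoint URL, the `gpt-4` model name, and the JSON request/response shape from OpenAI’s API documentation at the time of writing; `build_chat_request` and `ask_gpt4` are hypothetical helper names, and the API key is assumed to be stored in an `OPENAI_API_KEY` environment variable. Check the current API reference before relying on it.

```python
import json
import os
import urllib.request

# Assumption: Chat Completions endpoint as documented by OpenAI at the time of writing.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "gpt-4",
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a POST request asking `model` to answer `prompt`."""
    body = {
        "model": model,
        # A single user message; earlier turns of a conversation would go before it.
        "messages": [{"role": "user", "content": prompt}],
        # Upper bound on completion tokens, which are billed separately from the prompt.
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_gpt4(prompt: str) -> str:
    """Send the request and return the text of the first reply.

    Hypothetical helper: assumes OPENAI_API_KEY holds a key with GPT-4 access.
    """
    request = build_chat_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    # The reply text sits in the first choice's message.
    return data["choices"][0]["message"]["content"]
```

Calling `ask_gpt4("Summarize what GPT-4 is in one sentence.")` would send the request and return the reply text. Keeping request construction separate from sending makes it easy to inspect the payload without a network call; OpenAI’s official `openai` Python package wraps the same endpoint if you prefer not to handle HTTP directly.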

Public Demonstration: OpenAI also live-streamed the first public GPT-4 demonstration on YouTube, highlighting some of its innovative new features. Although the exact schedule for GPT-4’s wider availability is not yet known, its initial release represents an important leap forward for the field of AI.

API Pricing:

- The version with an 8K-token context window (approximately 13 pages of text) costs $0.03 per 1K prompt tokens and $0.06 per 1K completion tokens.

- The version with a 32K-token context window (about 52 pages of text) costs $0.06 per 1K prompt tokens and $0.12 per 1K completion tokens.
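Because prompt and completion tokens are billed at different rates, the cost of a request depends on how its tokens split between the two. Here is a minimal Python sketch of that arithmetic, assuming the launch prices listed above (prices may change). `estimate_cost` is a hypothetical helper, and `Decimal` is used to avoid floating-point rounding in currency math.

```python
from decimal import Decimal

# Launch prices in USD per 1K tokens, as listed above: (prompt rate, completion rate).
# Assumption: these may change; check OpenAI's pricing page for current values.
PRICES_PER_1K = {
    "8k": (Decimal("0.03"), Decimal("0.06")),
    "32k": (Decimal("0.06"), Decimal("0.12")),
}


def estimate_cost(prompt_tokens: int, completion_tokens: int, context: str = "8k") -> Decimal:
    """Return the USD cost of one request, billing prompt and completion tokens separately."""
    prompt_rate, completion_rate = PRICES_PER_1K[context]
    return (Decimal(prompt_tokens) / 1000 * prompt_rate
            + Decimal(completion_tokens) / 1000 * completion_rate)
```

For example, a request with 1,500 prompt tokens and 500 completion tokens on the 8K version costs 1.5 × $0.03 + 0.5 × $0.06 = $0.075, so `estimate_cost(1500, 500)` returns `Decimal('0.075')`. On the 32K version, 10,000 prompt tokens and 2,000 completion tokens cost $0.60 + $0.24 = $0.84.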

Key Highlights of GPT-4

Advanced creativity, visual input, and longer context

According to OpenAI, GPT-4 is highly advanced in three crucial areas: creativity, visual input, and longer context.

Creativity: GPT-4 is better at generating, editing, and iterating with users on creative and technical writing tasks, such as composing music, writing screenplays, producing technical documentation, and even learning a user’s writing style.

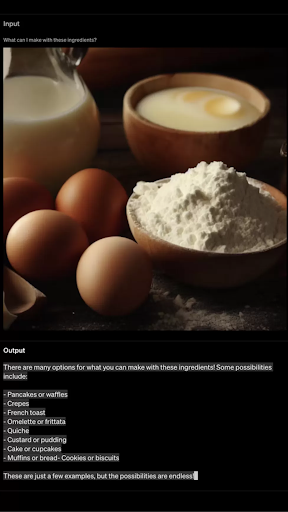

Visual input: Unlike previous versions of ChatGPT, GPT-4 can handle image inputs as well as text. The model can caption and interpret complex visual inputs, for example recognizing a Lightning cable adapter in a picture of one connected to an iPhone.

In an example on the GPT-4 website, the chatbot is shown an image of a few baking ingredients and asked what could be made with them. This was the response:

Source: BBC

Although Microsoft reportedly hinted at video input capabilities at a recent AI event, OpenAI has yet to demonstrate them.

Longer context: OpenAI says in a video that GPT-4 can accept and produce up to 25,000 words of text, compared with roughly 3,000 words for ChatGPT. This makes it suitable for long-form content creation and extended dialogues, and it can work with lengthy documents such as the text of a web page supplied by the user.
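The 25,000-word figure lines up with the 32K-token version of the model if you apply the common rule of thumb that one token is roughly three-quarters of an English word (32,768 × 0.75 ≈ 24,600 words). The Python sketch below uses that heuristic to estimate whether a text fits in a context window. It assumes the API model names `gpt-4` (8,192 tokens) and `gpt-4-32k` (32,768 tokens); the helper names are hypothetical, the words-per-token ratio is only an approximation, and counting tokens with OpenAI’s `tiktoken` tokenizer would be more accurate. The prompt and the reply share the same window, so the sketch lets you reserve room for the reply.

```python
# Assumed context window sizes in tokens; prompt and completion share this budget.
CONTEXT_TOKENS = {"gpt-4": 8_192, "gpt-4-32k": 32_768}


def estimate_tokens(word_count: int) -> int:
    """Rough token estimate using the ~0.75 words-per-token rule of thumb, rounded up."""
    # ceil(word_count / 0.75) == ceil(4 * word_count / 3), done in integer arithmetic.
    return (4 * word_count + 2) // 3


def fits_in_context(word_count: int, model: str = "gpt-4", reserved_for_reply: int = 0) -> bool:
    """Check whether a text of `word_count` words, plus room for the reply, fits the window."""
    return estimate_tokens(word_count) + reserved_for_reply <= CONTEXT_TOKENS[model]


def max_words(model: str) -> int:
    """Approximate number of words that would fill the entire context window."""
    return CONTEXT_TOKENS[model] * 3 // 4
```

Here `max_words("gpt-4-32k")` gives 24,576 words and `max_words("gpt-4")` gives 6,144. A 24,000-word document (about 32,000 estimated tokens) does not fit the 8K window at all, and fits the 32K window only if no more than 768 tokens are reserved for the reply.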

Safer than its predecessor

In OpenAI’s internal testing, GPT-4 proved to be safer than previous GPT models. Compared with GPT-3.5, it is 82% less likely to respond to requests for “disallowed” content and 40% more likely to produce factual responses.

To improve the model, OpenAI worked with more than 50 experts in domains such as AI safety and security, who provided early feedback by adversarially testing it.

Training

OpenAI trained GPT-4 on a mix of publicly available data, including data from public webpages, and data it has licensed. Training took place on a supercomputer that OpenAI and Microsoft designed from scratch in the Azure cloud.

According to the company, OpenAI spent six months iteratively aligning GPT-4, using lessons from its internal adversarial testing program and from ChatGPT. The effort produced OpenAI’s best results to date, though still far from perfect, on factuality, steerability, and refusing to go outside of guardrails.

Exhibits “human level” performance

GPT-4 exhibits “human-level” performance on a variety of professional and academic benchmarks. For instance, it passes a simulated bar exam with a score around the top 10% of test takers, whereas GPT-3.5 scored around the bottom 10%.

Multilingual support

The model offers sophisticated multilingual support and can understand and generate text in a wide variety of languages. Businesses that operate globally and need to engage with customers in many languages stand to benefit most from this capability.

Early Adopters

Microsoft’s Bing Chat, Stripe, Duolingo, Morgan Stanley, and Khan Academy are a few early GPT-4 adopters.

- Microsoft confirmed in a blog post that the new Bing Chat has been running on GPT-4.

- Stripe is using it to scan business websites and send a summary report to its customer support staff.

- Morgan Stanley is developing a GPT-4-powered system that searches company documents, retrieves relevant information, and delivers it to financial analysts.

- Duolingo has integrated GPT-4 into a new language learning subscription tier.

- Khan Academy is using GPT-4 to create an automated tutor.

Challenges Faced by GPT-4

Alongside the new capabilities, OpenAI also describes some of GPT-4’s limitations. Like earlier GPT models, it still has known issues such as “social biases, hallucinations, and adversarial prompts,” according to OpenAI.

In other words, it is not flawless, but OpenAI says it is working to address all of these problems.

Future Directions for GPT-4

In the area of natural language processing, GPT-4 is anticipated to bring about a number of advancements and improvements. The following are some possible directions for GPT-4 in the future:

- Multimodal Learning: Multimodal learning is the capacity to process information from several sources, such as text, images, audio, and video. GPT-4 already accepts images alongside text, and this capability is anticipated to expand, allowing the model to comprehend and produce content in a wider range of complex scenarios than previous GPT models.

- Greater Efficiency: GPT-4 is anticipated to become faster and more efficient than its predecessors, allowing it to handle more data and deliver more accurate results in less time.

- Improved Personalization: GPT-4 is anticipated to adopt personalization techniques for generating content suited to specific users. This would let it provide more relevant, personalized information in applications such as chatbots and virtual assistants.

- Enhanced Contextual Understanding: GPT-4 is anticipated to have a greater understanding of contextual information, such as tone, emotion, and intent, allowing it to produce responses that are more accurate and pertinent.

- Improved Transfer Learning: Transfer learning lets a model apply what it has learned on one task to another. GPT-4 is anticipated to outperform its predecessors at transfer learning, making it effective across a broader spectrum of tasks.

- Ethical Considerations: As GPT-4 develops, it will become more important to address ethical issues, including bias, privacy, and transparency. AI researchers and developers will need to make sure that GPT-4 makes impartial, fair decisions and that user data is protected and used in an ethical manner.

FAQs

How can I access GPT-4?

GPT-4 is available in a limited form through ChatGPT Plus, the premium version of OpenAI’s chatbot. Businesses that make it off the waitlist can use the API to incorporate it into their own products.

Is GPT-4 free?

At least for now, GPT-4 is not available for free. OpenAI has stated that users can try it through ChatGPT Plus, its $20-per-month subscription service.

What can GPT-4 be used for?

Language translation, content creation, chatbots, language-based search engines, and personalized content are just a few of GPT-4’s many real-world uses.

Conclusion

In conclusion, GPT-4 has significant potential to advance NLP and aid in the creation of more sophisticated AI systems. While some challenges still need to be addressed, its promising applications and future directions make it an exciting development to watch in the years to come. As researchers continue to push the limits of what is possible in NLP, we can anticipate new and intriguing uses for GPT-4.